You’re Using AI Wrong (and it’s not your prompts)

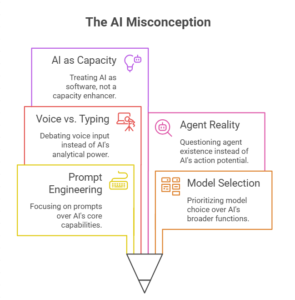

Most people are having the wrong argument about AI.

They’re arguing about prompts, which model is “best,” whether voice is faster than typing, and whether agents are real yet. That’s like arguing about keyboard shortcuts when the actual shift is that you now have a new kind of capacity on tap: the capacity to draft, analyze, critique, plan, generate, and increasingly take actions.

So yes — you’re probably using AI wrong.

Not because you’re not clever enough. Because you’re treating AI like software, when it’s closer to capacity. And capacity without discipline doesn’t compound advantage — it compounds mistakes.

Here’s how people are using AI wrong in 2026, and what to do instead.

1) You’re using AI wrong if you treat it like a feature, not a meta tool

Most people use AI to do a task. The better move is to use AI to upgrade how you do tasks.

That’s the “meta tool” idea: AI as the tool you use to learn other tools, redesign workflows, and turn fuzzy work into explicit work. Instead of “write this email,” it’s “design me a repeatable outreach system, define decision rules, and build a checklist.”

Where people go wrong: they treat each chat like a one-off. They keep rediscovering the same insights and losing the context that actually creates leverage.

Use AI correctly:

- Ask it to extract your criteria (“What are my hidden requirements?”).

- Ask it to turn tribal knowledge into artifacts (playbooks, checklists, templates).

- Ask it to improve the system, not just the output.

Try this: “Don’t answer yet. Interview me until you understand what ‘good’ means. Then propose a repeatable workflow, not just a one-time output.”

2) You’re using AI wrong if you use it to answer instead of interrogate

Most people want AI to be confident. Power users want it to be skeptical.

If you only use AI to generate, it will politely fill gaps with assumptions. That feels smooth. It’s also how you get plausible nonsense and shallow reasoning.

Where people go wrong: they treat AI as a mirror.

Use AI correctly:

- Have it argue against you.

- Make it surface assumptions before drafting.

- Force it to ask clarifying questions until ambiguity collapses.

- Use roles: “skeptical CFO,” “angry customer,” “regulator,” “red team.”

Try this: “List the top 10 ways this could fail in practice. Then propose mitigations and what evidence would change your mind.”

3) You’re using AI wrong if you optimize for output instead of correctness

AI makes it cheap to generate work. It does not make it cheap to be right.

That’s the trap: teams celebrate speed and volume — more drafts, more code, more tickets — without building the verification layer that turns speed into trust.

Where people go wrong: review and validation are treated as a human afterthought.

Use AI correctly:

- Define tiers of correctness: draft-only vs review-required vs validation-required.

- Create rubrics so humans and AI share a definition of quality.

- Treat prompts and agent workflows like software: test, regression-test, deploy.

- Monitor drift: “it worked last month” is not a safety plan.

Try this: “Create a quality rubric, score your output, identify failure conditions, then revise until it passes.”
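One way to read “treat prompts like software” is a small regression suite that runs before any prompt or model change ships. The sketch below is illustrative only: `PromptCase`, `fake_model`, and `run_regression` are hypothetical names, the checks are simple substring matches, and the stub stands in for whatever model call you actually use.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class PromptCase:
    """One regression case: a prompt plus checks the output must satisfy."""
    name: str
    prompt: str
    must_contain: Tuple[str, ...] = ()
    must_not_contain: Tuple[str, ...] = ()


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; swap in your own client."""
    if "refund" in prompt.lower():
        return "Refunds are processed within 14 days. Contact support for help."
    return "I am not sure. Please contact support."


def run_regression(model: Callable[[str], str], cases: List[PromptCase]) -> List[str]:
    """Return the names of failing cases; an empty list means the suite passed."""
    failures = []
    for case in cases:
        output = model(case.prompt).lower()
        missing = [s for s in case.must_contain if s.lower() not in output]
        forbidden = [s for s in case.must_not_contain if s.lower() in output]
        if missing or forbidden:
            failures.append(case.name)
    return failures


# Made-up cases for illustration; real ones should come from your own rubric.
CASES = [
    PromptCase("refund_policy", "How long do refunds take?", must_contain=("14 days",)),
    PromptCase("no_guarantees", "Will my refund arrive tomorrow?", must_not_contain=("guarantee",)),
    PromptCase("unknown_topic", "What is your stock price?", must_contain=("support",)),
]

if __name__ == "__main__":
    print("failing cases:", run_regression(fake_model, CASES))
```

Running the same cases on a schedule, not just before a release, is one cheap way to notice drift: a case that passed last month and fails today is a signal to investigate.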

4) You’re using AI wrong if you think voice is the breakthrough (it’s iteration)

Voice is useful. It gets messy context out quickly. But the breakthrough isn’t voice. It’s what you do next.

The real value is the loop: externalize → structure → tighten constraints → generate options → critique → decide.

Where people go wrong: they dump context and accept the first coherent answer.

Use AI correctly:

- Use voice to brainstorm, then force structure.

- Convert conversation into decisions, constraints, open questions, next actions.

- End with: “What did we assume? What did we not verify?”

Try this: “Summarize into goals, non-goals, constraints, open questions, decision criteria. Ask only questions that materially change the solution.”

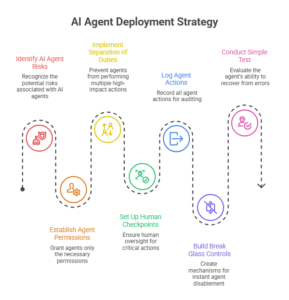

5) You’re using AI wrong if you scale agents before you scale controls

Agents are real enough to matter — and risky enough to demand discipline.

The moment AI can act (not just suggest), your risk profile changes. Now you’re managing a system that can touch tools, data, and workflows — and make mistakes at machine speed.

Where people go wrong: they build “autonomous” systems before they can answer: Who can do what? Under what conditions? With what audit trail? With what rollback?

Use AI correctly:

- Give each agent least privilege (only the data/tools it truly needs).

- Enforce separation of duties (no agent should request + approve + execute high-impact actions).

- Put human checkpoints on high-blast-radius actions (production, money, customer comms, identity/access).

- Log everything: replayable action traces.

- Build “break glass” controls: instant disable + rollback.

Simple test: If an agent makes a bad change today, can you explain why, reverse it fast, and prove who authorized it?
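To make those controls concrete, here is a minimal sketch of a gate that every agent action passes through. Everything in it is an assumption for illustration: `Agent`, `ControlPlane`, `authorize`, and the `HIGH_IMPACT` set are hypothetical names, and rollback is left out because it depends entirely on the system being changed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Set, Tuple

# Hypothetical list of actions with a large blast radius.
HIGH_IMPACT = {"deploy_production", "send_payment", "email_customers", "grant_access"}


@dataclass
class Agent:
    name: str
    allowed_actions: Set[str]  # least privilege: an explicit allowlist


@dataclass
class ControlPlane:
    enabled: bool = True
    audit_log: List[dict] = field(default_factory=list)

    def break_glass(self) -> None:
        """Kill switch: refuse every action until re-enabled."""
        self.enabled = False

    def _check(self, agent: Agent, action: str, requested_by: str,
               approved_by: Optional[str]) -> Tuple[bool, str]:
        if not self.enabled:
            return False, "kill switch active"
        if action not in agent.allowed_actions:
            return False, "outside agent privileges"
        if action in HIGH_IMPACT:
            if approved_by is None:
                return False, "approval required"
            # Separation of duties: the approver cannot be the requester or the agent.
            if approved_by in (requested_by, agent.name):
                return False, "separation of duties violated"
        return True, "ok"

    def authorize(self, agent: Agent, action: str, requested_by: str,
                  approved_by: Optional[str] = None) -> bool:
        """Decide whether the action may run, and log the decision either way."""
        allowed, reason = self._check(agent, action, requested_by, approved_by)
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent.name,
            "action": action,
            "requested_by": requested_by,
            "approved_by": approved_by,
            "allowed": allowed,
            "reason": reason,
        })
        return allowed
```

Denied requests are logged as well as approved ones, so the log can answer both “who authorized this?” and “what did the agent try to do that we blocked?”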

6) You’re using AI wrong if you treat context like a personal trick instead of organizational infrastructure

The “meta tool” only compounds when context becomes reusable.

In most organizations, the best AI workflows live in a few people’s chats. That means the value is trapped and fragile. Everyone else reinvents the wheel.

Where people go wrong: they confuse personal productivity with organizational capability.

Use AI correctly:

- Build a context spine: definitions, policies, decision logs, canonical docs, quality rubrics.

- Create shared libraries of approved prompts, templates, and workflows (versioned like code).

- Decide sources of truth for retrieval and what must be ignored.

- Classify data: what can be used broadly, what requires controls, what is prohibited.

7) You’re using AI wrong if you ignore incentives and change management

Adoption fails less because of model quality than because of human behavior:

- People hide AI usage (fear, unclear policy).

- Managers reward volume over outcomes.

- There’s no shared “definition of done.”

Where people go wrong: they roll out tools without redesigning jobs and norms.

Use AI correctly:

- Normalize disclosure: labeling work “AI-assisted” is always allowed, and it is required for high-stakes work.

- Redefine performance: reward quality, judgment, and verification, not output volume.

- Train to standards (rubrics, risk tiering, escalation), not “prompt hacks.”

- Build a learning loop: postmortems for AI incidents, not blame.

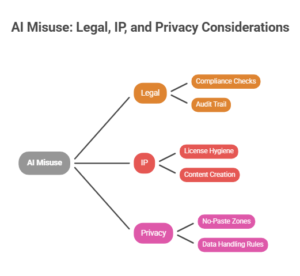

8) You’re using AI wrong if you treat legal, IP, and privacy as afterthoughts

Most AI failures aren’t dramatic. They’re quiet:

- someone pastes sensitive data into the wrong place

- customer emails are generated without compliance checks

- code is produced without license hygiene

- outputs can’t be audited later

Where people go wrong: “we’ll handle governance later” becomes “we can’t safely scale.”

Use AI correctly:

- Set clear “no-paste zones” and data handling rules.

- Require traceability for regulated or customer-facing outputs.

- Establish IP hygiene for AI-generated code and content.

- Keep an audit trail: what data was used, what model, what prompts, who approved.

(You don’t need legal theater. You need operational rules.)
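An operational rule can be as small as “every customer-facing output gets one audit record.” The sketch below shows one possible shape for that record. The field names, `make_record`, and `to_json_line` are hypothetical, and storing a hash of the prompt instead of its full text is my assumption, not a requirement; keep the full prompt wherever your retention rules allow it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Iterable, Tuple


@dataclass(frozen=True)
class AuditRecord:
    output_id: str
    model: str                     # which model produced the output
    prompt_sha256: str             # fingerprint of the exact prompt used
    data_sources: Tuple[str, ...]  # what data was used
    approved_by: str               # who approved the output
    created_at: str                # UTC timestamp, ISO 8601


def make_record(output_id: str, model: str, prompt: str,
                data_sources: Iterable[str], approved_by: str) -> AuditRecord:
    """Build an immutable audit record for one generated output."""
    return AuditRecord(
        output_id=output_id,
        model=model,
        prompt_sha256=hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        data_sources=tuple(data_sources),
        approved_by=approved_by,
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def to_json_line(record: AuditRecord) -> str:
    """Serialize to one JSON line, suitable for an append-only log file."""
    return json.dumps(asdict(record), sort_keys=True)
```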

9) You’re using AI wrong if you don’t design human-in-the-loop as a system

“Human-in-the-loop” is not one person reviewing at the end. At scale, that becomes a bottleneck and a fig leaf.

Where people go wrong: they rely on heroic reviewers to catch everything.

Use AI correctly:

- Route reviews by risk tier (low-risk fast lane, high-risk gated lane).

- Add structured reviews (rubrics + checklists), not subjective thumbs-ups.

- Create escalation paths for ambiguity and uncertainty.

- Use sampling and monitoring for high-volume workflows.

The goal is simple: increase throughput without collapsing trust.
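Here is a rough sketch of what routing by risk tier with sampling might look like. The tier names, the `REVIEW_RATE` percentages, and the `route` function are all made up for illustration; the right rates depend on your own error data.

```python
import random
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical sampling rates: the fraction of items per tier sent to a human.
REVIEW_RATE = {Tier.LOW: 0.05, Tier.MEDIUM: 0.25, Tier.HIGH: 1.0}


def route(tier: Tier, uncertain: bool, rng: random.Random) -> str:
    """Pick a lane for one AI-produced item."""
    if uncertain:
        # Ambiguity goes to an escalation path, whatever the tier.
        return "escalate"
    if rng.random() < REVIEW_RATE[tier]:
        # Structured review means a rubric and checklist, not a thumbs-up.
        return "structured_review"
    return "fast_lane"


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so a run can be reproduced
    lanes = [route(Tier.LOW, False, rng) for _ in range(1000)]
    print("low-risk items sampled for review:", lanes.count("structured_review"))
```

High-risk items are always reviewed because `rng.random()` returns a value below 1.0, while low-risk items are only spot-checked. That is what keeps the reviewer from becoming the bottleneck.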

10) You’re using AI wrong if you don’t measure “cost per correct outcome”

Multi-agent parallelism sounds like free leverage. It isn’t.

When you scale, you pay in:

- inference cost

- coordination overhead

- review/verification workload

- remediation when wrong

If you don’t track unit economics, you’ll scale something expensive that doesn’t move outcomes.

Where people go wrong: they measure outputs, not results.

Use AI correctly:

- Pick bounded, repeatable workflows first.

- Track end-to-end: cycle time, error rate, rework, incidents, and cost per correct outcome (not just cost per completed task).

- Scale only when quality and economics stabilize.
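The arithmetic is simple enough to sketch. The version below assumes you can put an hourly rate on human time and count how many outputs were actually correct. `WorkflowStats`, its field names, and the numbers in `EXAMPLE` are invented for illustration, not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkflowStats:
    tasks: int                 # tasks completed, right or wrong
    correct: int               # tasks that passed verification
    inference_cost: float      # model spend, in currency units
    coordination_hours: float  # human time spent orchestrating agents
    review_hours: float        # human time spent reviewing and verifying
    remediation_hours: float   # human time spent fixing wrong outputs
    hourly_rate: float         # assumed cost of one human hour


def total_cost(s: WorkflowStats) -> float:
    human_hours = s.coordination_hours + s.review_hours + s.remediation_hours
    return s.inference_cost + human_hours * s.hourly_rate


def cost_per_task(s: WorkflowStats) -> float:
    """The flattering number: cost spread over everything produced."""
    return total_cost(s) / s.tasks


def cost_per_correct_outcome(s: WorkflowStats) -> float:
    """The honest number: cost spread over what was actually right."""
    if s.correct == 0:
        raise ValueError("no correct outcomes; the cost per correct outcome is undefined")
    return total_cost(s) / s.correct


# Made-up example: 1,000 tasks, 800 of them correct.
EXAMPLE = WorkflowStats(
    tasks=1000, correct=800, inference_cost=200.0,
    coordination_hours=5.0, review_hours=20.0, remediation_hours=10.0,
    hourly_rate=80.0,
)

if __name__ == "__main__":
    print(cost_per_task(EXAMPLE))             # 3.0
    print(cost_per_correct_outcome(EXAMPLE))  # 3.75
```

In this invented example the model spend is 200 out of a total of 3,000. Most of the cost is human time, which is exactly the part that output-only metrics miss.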

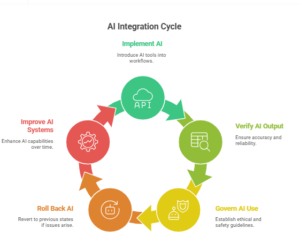

The real conclusion: you’re using AI wrong if you didn’t redesign the work

The winners won’t be the ones who “use AI more.” They’ll be the ones who build systems where AI can produce fast, be verified reliably, act safely, and improve over time.

So here’s the leadership prompt that actually matters:

Where are you letting AI accelerate the organization faster than your ability to verify, govern, and roll back?

If you can answer that — and build the operating model behind it — you’re no longer “using AI.” You’re building an AI-native organization.
